We know the digital twin as a combination of one or more data sets and a visual representation of the physical object it mirrors. Digital twins are evolving to meet the practical needs of users. In asset-heavy industries, the push to optimize production, improve product quality, and adopt predictive maintenance has amplified the need for a digital representation of both the past and present condition of a process or asset.

An industrial digital twin is the aggregation of all possible data types and data sets, both historical and real-time, directly or indirectly related to a given physical asset or set of assets, in an easily accessible, unified location.

The collected data must be trusted and contextualized, linked in a way that mirrors the real world, and made consumable for a variety of use cases.
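To make the definition concrete, here is a minimal Python sketch of such a unified store. It assumes a simple parent/child asset hierarchy as the "link that mirrors the real world" and a boolean `trusted` flag standing in for data validation. The class, method and field names (`IndustrialTwin`, `Asset`, `Reading`, `trusted_series`) are hypothetical and not taken from any product.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List, Optional


@dataclass
class Reading:
    timestamp: datetime
    value: float
    source: str            # origin of the value, e.g. "historian" or "sensor-stream"
    trusted: bool = True   # hypothetical quality flag set by an upstream validation step


@dataclass
class Asset:
    asset_id: str
    parent_id: Optional[str] = None   # parent link mirrors the physical asset hierarchy
    readings: Dict[str, List[Reading]] = field(default_factory=dict)


class IndustrialTwin:
    """Hypothetical unified store: every reading is attached to an asset in a hierarchy."""

    def __init__(self) -> None:
        self.assets: Dict[str, Asset] = {}

    def add_asset(self, asset_id: str, parent_id: Optional[str] = None) -> None:
        self.assets[asset_id] = Asset(asset_id, parent_id)

    def ingest(self, asset_id: str, signal: str, reading: Reading) -> None:
        # Historical back-fills and live values go through the same entry point.
        self.assets[asset_id].readings.setdefault(signal, []).append(reading)

    def subtree(self, asset_id: str) -> List[str]:
        """The asset itself plus every asset below it (depth-first)."""
        ids = [asset_id]
        for a in self.assets.values():
            if a.parent_id == asset_id:
                ids.extend(self.subtree(a.asset_id))
        return ids

    def trusted_series(self, asset_id: str, signal: str) -> List[Reading]:
        """Trusted readings of one signal across an asset and its sub-assets, time-ordered."""
        out = [
            r
            for aid in self.subtree(asset_id)
            for r in self.assets[aid].readings.get(signal, [])
            if r.trusted
        ]
        return sorted(out, key=lambda r: r.timestamp)


twin = IndustrialTwin()
twin.add_asset("pump-1")
twin.add_asset("motor-1", parent_id="pump-1")
twin.ingest("pump-1", "temperature", Reading(datetime(2024, 1, 1, 12), 61.0, "historian"))
twin.ingest("motor-1", "temperature", Reading(datetime(2024, 1, 1, 11), 74.5, "sensor-stream"))
twin.ingest("motor-1", "temperature",
            Reading(datetime(2024, 1, 1, 13), 999.0, "sensor-stream", trusted=False))
print([r.value for r in twin.trusted_series("pump-1", "temperature")])  # [74.5, 61.0]
```

Querying the pump returns the motor's data too, because the hierarchy carries the context, while the reading flagged as untrusted is filtered out.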

Digital twins must serve data in a way that aligns with how operational decisions are made. Because the type and nature of those decisions differ, you may need multiple twins: one for the supply chain, one for different operating conditions, one that reflects maintenance, one for visualization, one for simulation, and so on.

This shows that a digital twin is not a monolith but an ecosystem. To support that ecosystem, your company or group needs an efficient, scalable way of populating all the different digital twins with data.
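The "one source, many twins" idea can be sketched as a hub that keeps a single set of contextualized records and rebuilds each purpose-specific twin from it on demand. Everything here (`TwinHub`, the record fields, the 3.0 vibration threshold) is a hypothetical illustration of the pattern; it is not a scalable implementation, which would typically need streaming ingestion and incremental updates.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]  # one contextualized record, e.g. {"asset": ..., "signal": ..., "value": ...}


class TwinHub:
    """Hypothetical hub: one shared data store feeding many purpose-built twins."""

    def __init__(self) -> None:
        self.records: List[Record] = []
        self.views: Dict[str, Callable[[List[Record]], object]] = {}

    def publish(self, record: Record) -> None:
        self.records.append(record)

    def register(self, name: str, build: Callable[[List[Record]], object]) -> None:
        self.views[name] = build

    def refresh(self) -> Dict[str, object]:
        # Every twin is rebuilt from the same records, so they cannot diverge.
        return {name: build(self.records) for name, build in self.views.items()}


def maintenance_view(records: List[Record]) -> List[str]:
    # Hypothetical rule: flag assets whose vibration ever exceeded 3.0
    return sorted({r["asset"] for r in records if r["signal"] == "vibration" and r["value"] > 3.0})


def visualization_view(records: List[Record]) -> Dict[str, object]:
    latest: Dict[str, object] = {}
    for r in records:
        latest[r["asset"]] = r["value"]  # later records overwrite earlier ones
    return latest


hub = TwinHub()
for rec in [
    {"asset": "pump-1", "signal": "vibration", "value": 2.1},
    {"asset": "pump-1", "signal": "vibration", "value": 3.4},
    {"asset": "fan-7", "signal": "vibration", "value": 1.2},
]:
    hub.publish(rec)

hub.register("maintenance", maintenance_view)
hub.register("visualization", visualization_view)
twins = hub.refresh()
print(twins["maintenance"])    # ['pump-1']
print(twins["visualization"])  # {'pump-1': 3.4, 'fan-7': 1.2}
```

Adding another twin (for example, a simulation input view) means registering one more function; the ingestion path stays the same.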

Participants included:

- IGEO/CSIC, the Spanish national research centre;
- SJSU, which leads the development of WRF-Sfire in the USA;
- USFS-RMRS, which presented the US initiative for advancing fire management strategies in more detail;
- ADAI, the coordinator of the FirEUrisk project;
- the University of Lisbon, which presented a detailed study of one of the worst fire episodes in Portugal;
- the University of Corsica, which is leading the development of a new fire simulation model coupled with a European atmospheric model (MesoNH) and collaborates in several European projects such as FireRES;
- the University of Aveiro, which is developing an operational service to simulate smoke dispersion from forest fires and its possible impact on nearby populations, to help manage these events;
- UPC (Catalonia), which focused on developing and applying infrared (IR) measurement techniques to study fire dynamics and plume behavior;
- EVIDEN, ICCS and MeteoGrid, which gave an overview of HiDALGO2's progress in WRF-Sfire simulation, in the analysis of its scalability in HPC environments, and in data management and orchestration services.

HiDALGO 2 focuses on addressing global societal challenges such as disaster resilience, climate change, and environmental sustainability through high-performance computing (HPC) and advanced simulation techniques. By leveraging European HPC infrastructure under the EuroHPC Joint Undertaking, HiDALGO 2 aims to deliver scalable, efficient computational tools that support decision-making in critical areas such as disaster response, renewable energy, urban air quality, and wildfire management.

Key highlights include:

1. Technological goals: developing exascale-ready applications, optimizing simulation codes for multi-GPU architectures, and bridging skill gaps in computational science to foster the adoption of HPC in academia, industry, and government;

2. Use cases:
   - Urban Air Quality: real-time digital twins for air quality, wind, and urban comfort, providing actionable insights for city planning at 1 m resolution over 2 km × 2 km domains;
   - Renewable Energy: high-resolution modelling of wind and solar energy systems, including mesoscale-to-microscale downscaling to improve energy production forecasts;
   - Material Transport: simulation of sediment and pollutant transport in rivers, coupling hydrodynamics and thermodynamics with pollutant dynamics;
   - Wildfires: integrated modelling of fire spread, atmospheric interactions, and smoke transport, using virtual reality and Unreal Engine for immersive visualization; and

3. Innovative Visualization: advanced volumetric rendering of simulation outputs in Unreal Engine and other platforms, enabling real-time, intuitive representation of complex data for stakeholders.

HiDALGO 2 emphasizes the integration of HPC, artificial intelligence, and computational fluid dynamics to tackle large-scale, high-resolution phenomena. By fostering collaboration among leading European institutions, the project aims to advance environmental modelling, improve disaster response capabilities, and promote sustainability.
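The Urban Air Quality figures give a sense of why HPC is needed. Below is a back-of-the-envelope count for a 1 m grid over a 2 km × 2 km domain; the number of vertical levels (100) and of stored variables (5, in double precision) are assumptions chosen for illustration, not HiDALGO2 settings, and `grid_size` is a hypothetical helper.

```python
def grid_size(domain_x_m, domain_y_m, dx_m, n_levels, n_vars, bytes_per_value=8):
    """Return (horizontal columns, total cells, bytes for one snapshot of all variables)."""
    nx = round(domain_x_m / dx_m)
    ny = round(domain_y_m / dx_m)
    cells = nx * ny * n_levels
    return nx * ny, cells, cells * n_vars * bytes_per_value


# 2 km x 2 km at 1 m; 100 vertical levels and 5 float64 fields are assumed values.
columns, cells, nbytes = grid_size(2000, 2000, 1.0, n_levels=100, n_vars=5)
print(columns)       # 4000000
print(cells)         # 400000000
print(nbytes / 1e9)  # 16.0  (GB for a single snapshot)
```

Even under these modest assumptions, a single snapshot takes about 16 GB, before any time stepping or solver work arrays.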

- Introduce the objectives and challenges of building thermal regulations.

- Understand how to calculate the thermal balance of a room using manual methods.

- Acquire or update knowledge to solve thermal problems.
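One common manual method for a room's thermal balance is the steady-state heat loss sum: transmission through each envelope surface, U·A·(T_in − T_out), plus the ventilation loss, ρ·c_p·V̇·(T_in − T_out). The sketch below applies it to a hypothetical room. The U-values, dimensions, air-change rate and temperatures are illustrative assumptions, and a course that follows a specific national regulation may add correction factors this sketch omits.

```python
RHO_AIR = 1.2      # kg/m^3, indoor air density (assumed constant)
CP_AIR = 1005.0    # J/(kg*K), specific heat capacity of air


def transmission_loss(surfaces, t_in, t_out):
    """Conduction through the envelope: sum(U_i * A_i) * (T_in - T_out), in W."""
    return sum(u * area for u, area in surfaces) * (t_in - t_out)


def ventilation_loss(volume_m3, ach, t_in, t_out):
    """Air exchange: rho * cp * V_dot * (T_in - T_out), in W, with V_dot in m^3/s."""
    v_dot = ach * volume_m3 / 3600.0   # air changes per hour -> m^3/s
    return RHO_AIR * CP_AIR * v_dot * (t_in - t_out)


# Hypothetical 4 m x 5 m room, 2.5 m high, one exterior wall with a window, roof above.
surfaces = [
    (0.5, 4 * 2.5 - 2.0),   # exterior wall, U = 0.5 W/(m^2 K), window area subtracted
    (2.8, 2.0),             # window, U = 2.8 W/(m^2 K)
    (0.3, 4 * 5),           # roof, U = 0.3 W/(m^2 K)
]
t_in, t_out = 20.0, -5.0
q_t = transmission_loss(surfaces, t_in, t_out)
q_v = ventilation_loss(4 * 5 * 2.5, ach=0.5, t_in=t_in, t_out=t_out)
print(f"transmission {q_t:.1f} W, ventilation {q_v:.1f} W, total {q_t + q_v:.1f} W")
# transmission 390.0 W, ventilation 209.4 W, total 599.4 W
```

The total is the heating power needed to hold 20 °C under these design conditions, ignoring internal and solar gains.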

Dynamic Energy Simulation with Indoor Air Quality Models

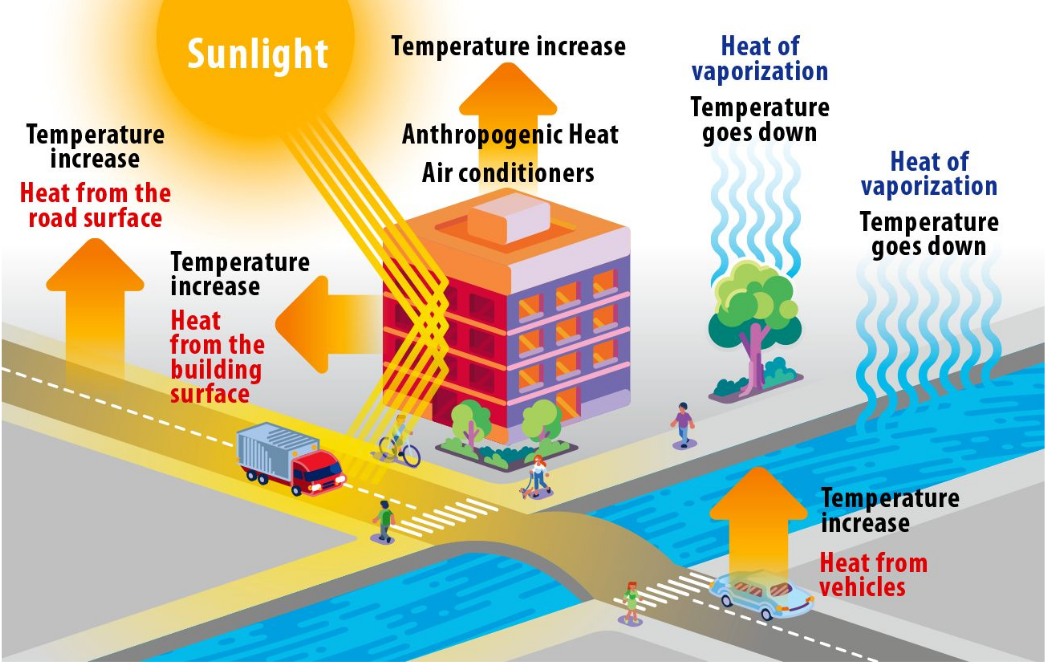

The HiDALGO2 Urban Building Pilot integrates Dynamic Energy Simulation with indoor air quality models for urban buildings, leveraging advanced algorithms and high-performance computing. Focused on both city-scale and building-scale analyses, it aims to enhance energy efficiency, comfort, and sustainability. This project aligns with global initiatives like the European Green Deal, emphasizing a holistic approach to urban environmental challenges.
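To illustrate what coupling dynamic energy simulation with an indoor air quality model means at the smallest scale, the sketch below integrates a single-zone lumped model: a heat balance for indoor temperature and a CO2 mass balance, both driven by the same ventilation airflow. It is a deliberately simplified stand-in, not the pilot's models or solvers. The zone parameters, the 600 W of gains and the CO2 generation rate of roughly 0.005 L/s per seated adult are assumed values, and `Zone`/`simulate` are hypothetical names.

```python
from dataclasses import dataclass

RHO_AIR = 1.2     # kg/m^3 (assumed)
CP_AIR = 1005.0   # J/(kg*K)


@dataclass
class Zone:
    volume: float        # m^3
    capacitance: float   # J/K, lumped thermal mass (hypothetical value)
    ua: float            # W/K, envelope conductance
    ach: float           # air changes per hour


def simulate(zone, t_out, co2_out, gains_w, co2_gen_m3s, hours, dt=60.0, t0=20.0, c0=None):
    """Explicit-Euler integration of a coupled single-zone heat and CO2 balance.

    Returns two lists, indoor temperature (deg C) and CO2 (ppm), one entry per step.
    """
    q_vent = zone.ach * zone.volume / 3600.0   # m^3/s of outdoor air
    h_vent = RHO_AIR * CP_AIR * q_vent         # W/K carried away by ventilation
    temp, co2 = t0, (co2_out if c0 is None else c0)
    temps, co2s = [temp], [co2]
    for _ in range(int(hours * 3600 / dt)):
        # Heat balance: C dT/dt = gains - (UA + rho*cp*Qv) * (T - T_out)
        d_temp = (gains_w - (zone.ua + h_vent) * (temp - t_out)) / zone.capacitance
        # CO2 balance: V dc/dt = G * 1e6 + Qv * (c_out - c), with c in ppm
        d_co2 = (co2_gen_m3s * 1e6 + q_vent * (co2_out - co2)) / zone.volume
        temp += d_temp * dt
        co2 += d_co2 * dt
        temps.append(temp)
        co2s.append(co2)
    return temps, co2s


room = Zone(volume=50.0, capacitance=5e6, ua=15.6, ach=0.5)
temps, co2s = simulate(room, t_out=-5.0, co2_out=420.0,
                       gains_w=600.0,          # two occupants plus a heater (assumed)
                       co2_gen_m3s=2 * 5e-6,   # about 0.005 L/s CO2 per seated adult (approximate)
                       hours=8)
print(f"T after 8 h: {temps[-1]:.1f} C, CO2: {co2s[-1]:.0f} ppm")
```

Raising `ach` lowers CO2 but increases the heat the zone loses, which is exactly the energy/air-quality trade-off that motivates simulating both together.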

This training course will focus on the following points:

Objectives:

- Enhance energy performance, indoor air quality, and human comfort in urban buildings.

- Focus on computational methods for modeling and optimizing urban environments.

Technical Workflow and Challenges:

- Comprehensive workflow integrating mesh generation, parallel mesh adaptation, and multi-fidelity models.

- Challenges in large-scale mesh generation for accurate computational modeling.

- Development of scalable solutions for complex urban building simulations.
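Mesh adaptation means refining the grid only where the solution varies sharply. A toy, serial, one-dimensional version is sketched below: intervals whose function jump exceeds a tolerance are bisected until none do. The test function, the tolerance and the `adapt` helper are hypothetical; real parallel adaptation of 3D urban meshes typically also involves error estimators and load balancing across processes, none of which is shown here.

```python
import math


def adapt(f, nodes, tol, max_passes=10):
    """Bisect every interval whose jump |f(b) - f(a)| exceeds tol; repeat until none do."""
    for _ in range(max_passes):
        refined = [nodes[0]]
        changed = False
        for a, b in zip(nodes, nodes[1:]):
            if abs(f(b) - f(a)) > tol:
                refined.append(0.5 * (a + b))   # split the interval at its midpoint
                changed = True
            refined.append(b)
        nodes = refined
        if not changed:
            break
    return nodes


def front(x):
    # Illustrative field with a steep front at x = 0.5 (e.g. a sharp temperature jump)
    return math.tanh(50.0 * (x - 0.5))


mesh = adapt(front, [i / 10 for i in range(11)], tol=0.1)
spacing = [b - a for a, b in zip(mesh, mesh[1:])]
print(len(mesh), min(spacing), max(spacing))
```

The uniform 0.1 spacing survives far from the front, while cells near x = 0.5 shrink by a factor of 64; that concentration of resolution is what makes large urban meshes affordable.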